V-JEPA is short for Video Joint Embedding Predictive Architecture.

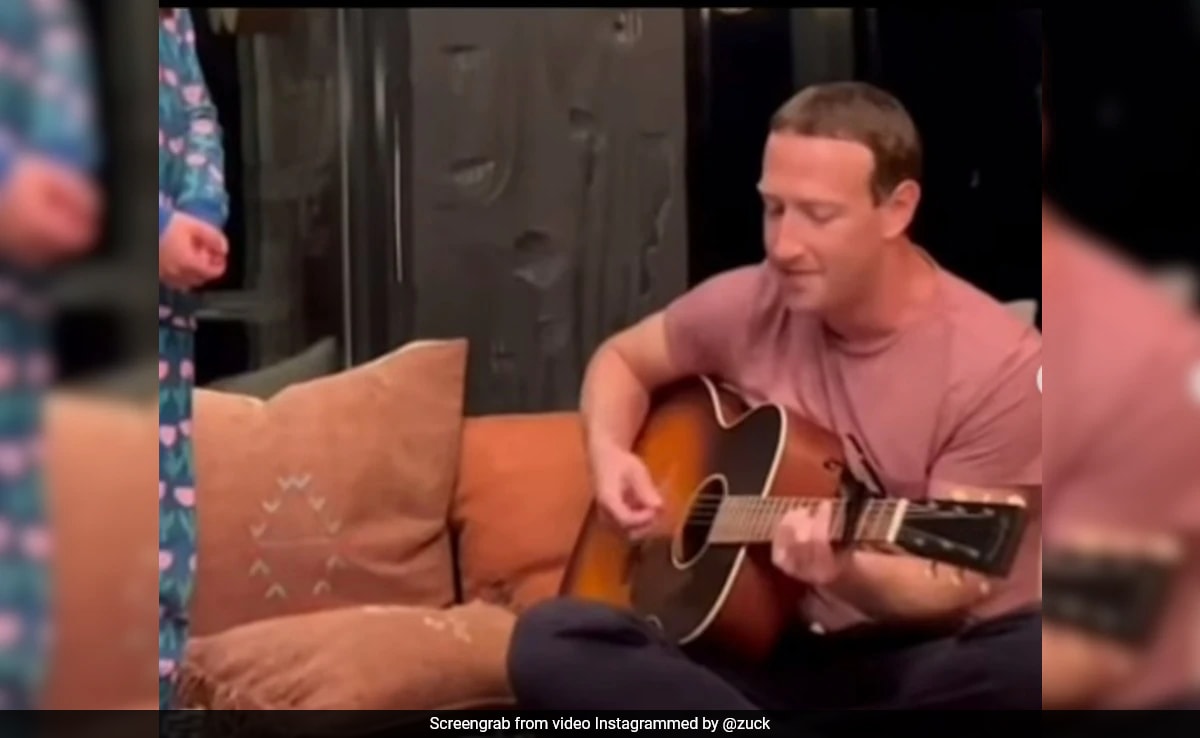

To promote Meta’s latest artificial intelligence (AI) model, which learns by watching videos, Mark Zuckerberg shared an old video of himself to introduce the new project, called V-JEPA. Taking to Instagram, Mr Zuckerberg posted a throwback clip in which he is seen singing and playing a song on the guitar for his daughter, Maxima. In the caption, he said he had tested the video on V-JEPA, which, according to its site, is a “non-generative model that learns by predicting missing or masked parts of a video in an abstract representation space”.

“Throwback to singing one of Max’s favourite songs. I recently tested this video with a new AI model that learns about the world by watching videos. Without being trained to do this, our AI model predicted my hand motion as I strummed chords. Swipe to see the results,” Mr Zuckerberg wrote in the caption of the post.

Mr Zuckerberg shared two separate videos. In the first clip, he is seen singing and playing the song on the guitar alongside Maxima. The second video shows the AI model’s results: V-JEPA predicted his hand motions as he played the guitar and filled in the video’s missing parts on its own.

Mr Zuckerberg shared the video a day ago, and the post has since received more than 51,000 likes.

Notably, V-JEPA, short for Video Joint Embedding Predictive Architecture, is a predictive analysis model that learns entirely from visual media. It can not only understand what’s going on in a video but also predict what comes next.

To train it, Meta used a new masking technique in which parts of the video were masked in both time and space, the company said in a blog post. This means that some frames were removed entirely, while others had fragments blacked out, forcing the model to predict both the current frame and the next one. According to the company, the model was able to do both efficiently. The model can analyse and make predictions on videos of up to 10 seconds in length.
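To make the idea of masking “in both time and space” concrete, here is a toy sketch. It assumes each frame is split into a grid of patches, and it hides some frames completely (temporal masking) plus one rectangular block in every remaining frame (spatial masking). The function name `spatiotemporal_mask`, the 25% frame-drop ratio and the single 4×4 block per frame are illustrative assumptions, not Meta’s actual settings or code.

```python
import random


def spatiotemporal_mask(num_frames, height, width, drop_frame_ratio=0.25,
                        block_size=(4, 4), seed=0):
    """Toy mask builder (hypothetical, not Meta's code).

    Returns (mask, dropped) where mask[t][y][x] is True if the patch at
    row y, column x of frame t is hidden, and dropped lists the frames
    that were removed entirely.
    """
    rng = random.Random(seed)
    mask = [[[False] * width for _ in range(height)] for _ in range(num_frames)]

    # Temporal masking (assumed ratio): hide a fraction of frames completely.
    num_dropped = int(num_frames * drop_frame_ratio)
    dropped = set(rng.sample(range(num_frames), num_dropped))

    bh, bw = block_size
    for t in range(num_frames):
        if t in dropped:
            for y in range(height):
                for x in range(width):
                    mask[t][y][x] = True
        else:
            # Spatial masking (assumed shape): black out one random block.
            top = rng.randrange(height - bh + 1)
            left = rng.randrange(width - bw + 1)
            for y in range(top, top + bh):
                for x in range(left, left + bw):
                    mask[t][y][x] = True
    return mask, sorted(dropped)


if __name__ == "__main__":
    mask, dropped = spatiotemporal_mask(16, 14, 14)
    hidden = sum(sum(sum(row) for row in frame) for frame in mask)
    print("fully hidden frames:", dropped)
    print("hidden patches:", hidden, "of", 16 * 14 * 14)
```

With 16 frames of 14×14 patches, this hides 4 whole frames and a 4×4 block in each of the other 12, or 976 of 3,136 patches. Per the description quoted above, V-JEPA then predicts the hidden parts “in an abstract representation space” rather than reconstructing their pixels; that prediction step is not modelled here.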

“For example, if the model needs to be able to distinguish between someone putting down a pen, picking up a pen, and pretending to put down a pen but not actually doing it, V-JEPA is quite good compared to previous methods for that high-grade action recognition task,” Meta said in a blog post.