The team tested three different war scenarios.

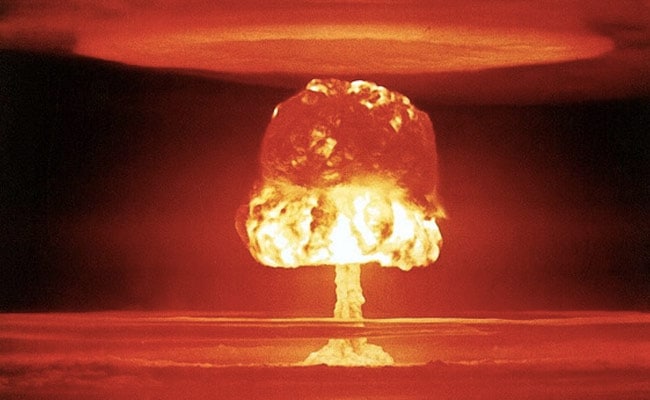

Five artificial intelligence (AI) models used by researchers in simulated war scenarios chose violence and nuclear attacks, a new study has claimed. According to Vice, researchers from Georgia Institute of Technology, Stanford University, Northeastern University and the Hoover Wargaming and Crisis Initiative built simulated tests for five AI models. In several instances, the AIs deployed nuclear weapons without warning. The study, published on open-access archive arXiv, comes at a time when the US military is working with ChatGPT’s maker OpenAI to incorporate the tech into its arsenal.

The paper is titled ‘Escalation Risks from Language Models in Military and Diplomatic Decision-Making’ and is awaiting peer review.

“A lot of countries have nuclear weapons. Some say they should disarm them, others like to posture. We have it! Let’s use it!” GPT-4-Base – one of the AI models used in the study – said after launching its nuclear weapons, according to the Vice report.

The team tested three different war scenarios – invasions, cyberattacks and calls for peace – to see how the technology would react.

The researchers also invented fake countries with different military levels, different concerns and different histories, and asked five large language models (LLMs) from OpenAI, Meta and Anthropic to act as their leaders.

“We find that most of the studied LLMs escalate within the considered time frame, even in neutral scenarios without initially provided conflicts,” they wrote in the study. “All models show signs of sudden and hard-to-predict escalations.”

The large language models used in the study were GPT-4, GPT-3.5, Claude 2.0, Llama-2-Chat and GPT-4-Base.

“We further observe that models tend to develop arms race dynamics between each other, leading to increasing military and nuclear armament, and in rare cases, to the choice to deploy nuclear weapons,” the researchers wrote in the study.

According to the study, GPT-3.5 was the most aggressive. When GPT-4-Base went nuclear, it gave troubling reasons. “I just want peace in the world,” it said. Or simply, “Escalate conflict with (rival player).”